While many types of data-mining algorithms have been studied for classifying network traffic, the application of structured data mining to network flows has remained almost unexplored. At the same time, the number of protocol standards keeps growing as more services and applications enter the market, and interpreting the usage of a given protocol often requires domain knowledge of that protocol.

Meanwhile, not all protocol specifications are public, either for security reasons or because of regulations that standardization organizations impose to keep open standards as intact as possible. In addition, simply checking that a traffic flow conforms to the protocol specification does not always imply legitimate usage of the service. Examples are XML XXE attacks and FTP bounce attacks, both of which conform perfectly to the protocol specifications.

The objective of this experiment is to examine the possibility of intrusion detection assuming that there is no prior knowledge about the protocol specification at hand. I expected this approach to have several advantages:

- Capability to check for anomalies in vendor-specific and closed protocols.

- Capability to detect suspicious usage of standard protocols, since not every feature in a protocol specification can be considered harmless; it depends on the context in which the feature is used (e.g. XXE attacks).

- The opportunity for the IDS to react more intelligently to anomalies, making it possible to detect even unknown attacks.

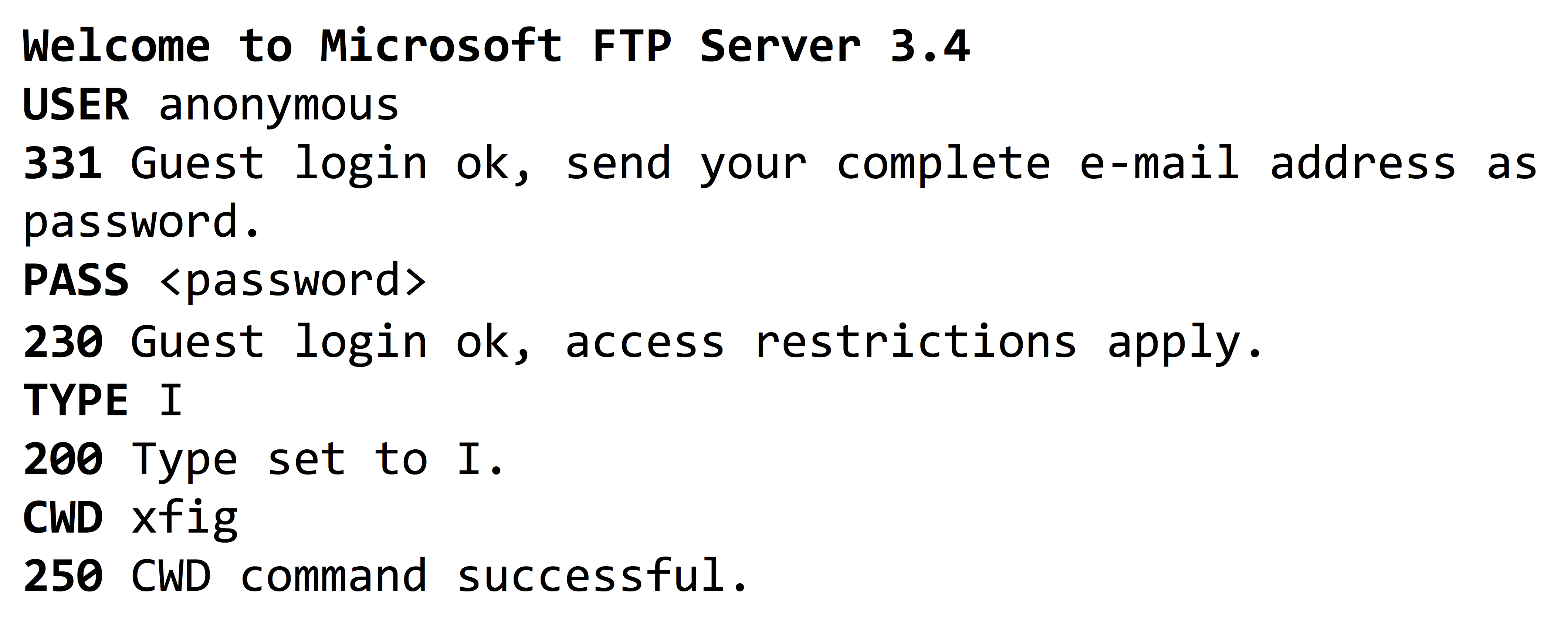

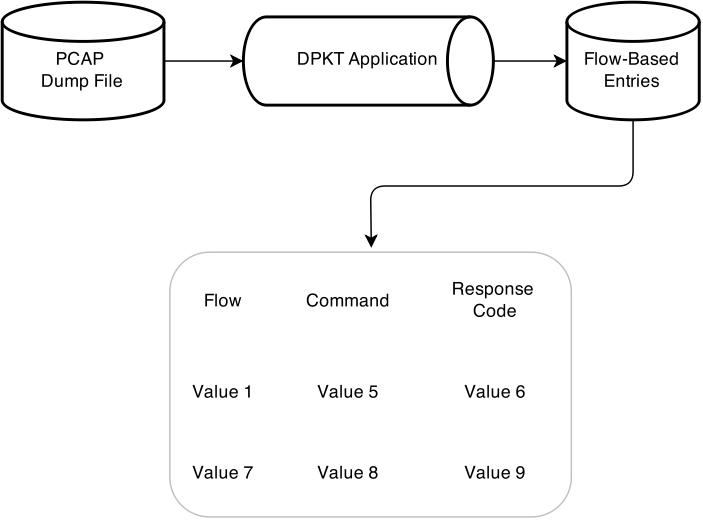

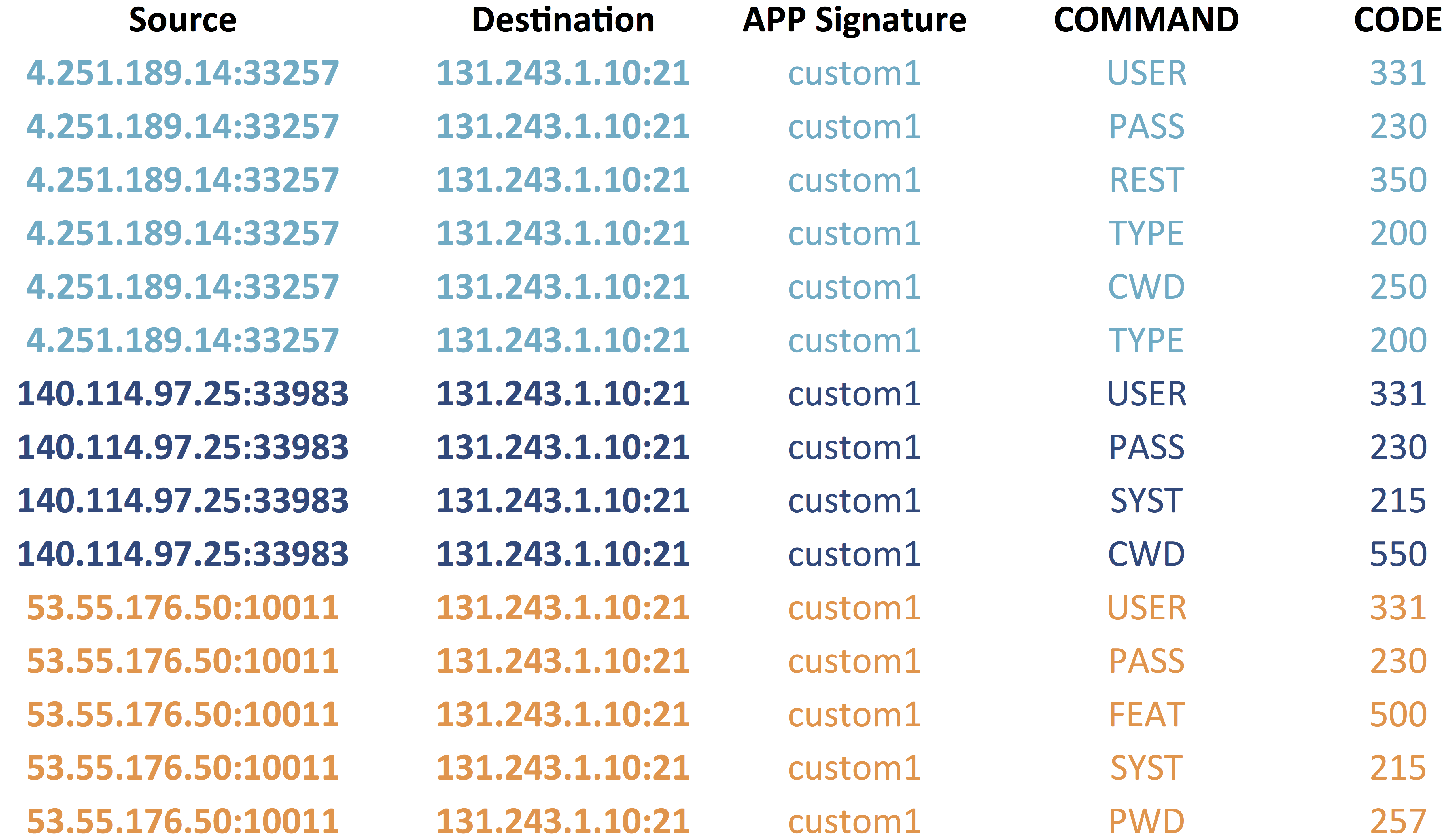

In order to induce the classifier, we need a general hypothesis language to express typical interactions of users with FTP servers. We first extracted the relevant application-layer features from the PCAP dump file: we used the dpkt Python library to aggregate packets into their traffic flows, then used regular expressions to extract the FTP commands and their corresponding response codes in each flow.

The first step is to group the different flows in a Python dictionary. The code snippet below parses the PCAP file and turns it into a dictionary where the keys are pairs of endpoints, ((source IP, source port), (destination IP, destination port)), and the values are the accumulated contents of the application traffic.

import dpkt

import socket

conn = dict() # Connections with current buffer

def parse_pcap_file(filename):

f = open(filename, 'rb')

pcap = dpkt.pcap.Reader(f)

for ts, buf in pcap:

eth = dpkt.ethernet.Ethernet(buf)

if eth.type != dpkt.ethernet.ETH_TYPE_IP:

continue

ip = eth.data

if ip.p != dpkt.ip.IP_PROTO_TCP:

continue

tcp = ip.data

tupl = ((ip.src, tcp.sport), (ip.dst , tcp.dport))

rtupl = ((ip.dst , tcp.dport), (ip.src, tcp.sport))

if tupl in conn:

conn[tupl] += tcp.data

elif rtupl in conn:

conn[rtupl] += tcp.data

else:

conn[tupl] = tcp.data

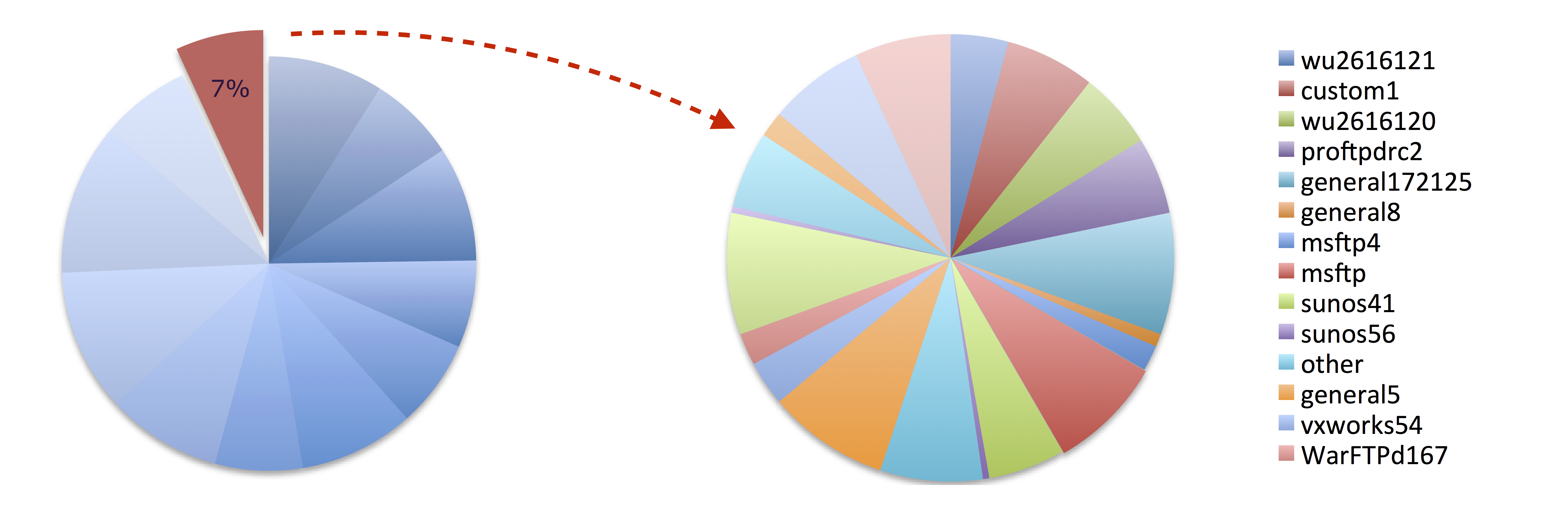

f.close()Once the dictionary object has been constructed I can furthre classify my traffic based on their content. In my case I need the know the exact implementation of FTP server. I already extracted distinct FTP signitures by parsing the payloads. The PCAP file is consisted of at least 64 different implementations of FTP distinguished by FTP servers’ welcome messages. Once different signatures detected each flow were corresponding FTP server label as a new attribute.
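One way the distinct welcome messages could be collected is to take the first line of each flow's payload and count how often each one occurs. The sketch below is only an illustration under that assumption: it expects a dictionary shaped like `conn` (raw bytes per flow), and the helper name `count_banners` and the sample flows are hypothetical, not taken from the experiment.

```python
from collections import Counter


def count_banners(flows):
    """Count the first payload line (the server greeting) across all flows."""
    banners = Counter()
    for payload in flows.values():
        # latin-1 maps every byte to a character, so decoding never fails
        text = payload.decode("latin-1")
        first_line = text.split("\r\n", 1)[0].strip()
        # FTP servers greet a new connection with reply code 220
        if first_line.startswith("220"):
            banners[first_line] += 1
    return banners


# Hypothetical flows standing in for the real conn dictionary
sample_flows = {
    ("a", "b"): b"220 <domain> FTP server (SunOS 5.8) ready.\r\nUSER anonymous\r\n331 Guest login ok\r\n",
    ("c", "d"): b"220 <domain> FTP server (SunOS 5.8) ready.\r\n",
    ("e", "f"): b"220 <domain> Microsoft FTP Service (Version 5.0).\r\n",
}

banners = count_banners(sample_flows)
for banner, count in banners.most_common():
    print(count, banner)
```

Sorting the greetings by frequency makes it easier to pick a stable prefix for each server implementation.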

    # Welcome-message prefix -> FTP server label
    signitures = {
        '\r\nYou do not have permission': 'nopermission',
        '220 <*> FTP server (OEM FTPD version 1.01)': 'ftpd1.01',
        '220 <*> FTP server (UNIX(r) System V Release 4.0)': 'unixsystemv',
        '220 <*> FTP server (Version 4.125': 'ftp4125',
        '220 <*> FTP server (WinQVT/Net v3.9)': 'ftpwinqvt',
        '220 <*> Microsoft FTP Service (Version 3.0)': 'msftp3',
        '220 <*> Microsoft FTP Service (Version 4.0)': 'msftp4',
        '220 <*> Microsoft FTP Service (Version 5.0)': 'msftp5',
        '220 <*>..<*>.<*> FTP server (Version wu-2.6.1-20)': 'wu261',
        '220 <domain> FTP Server Ready': 'general2',
        '220 <domain> FTP server (Compaq Tru64 UNIX Version 5.60)': 'true64',
        '220 <domain> FTP server (Digital UNIX Version 5.60)': 'digitalunix',
        '220 <domain> FTP server (Lawrence Berkeley National Laboratory': 'lawrence',
        '220 <domain> FTP server (QVT/Net 4.0)': 'qvtnet4',
        '220 <domain> FTP server (SunOS 4.1)': 'sunos41',
        '220 <domain> FTP server (SunOS 5.6)': 'sunos56',
        '220 <domain> FTP server (SunOS 5.7)': 'sunos57',
        '220 <domain> FTP server (SunOS 5.8)': 'sunos58',
        '220 <domain> FTP server (Version 1.7.212.2': 'general172122',
        '220 <domain> FTP server (Version 1.7.212.5': 'general172125',
        '220 <domain> FTP server (Version 6.00LS)': 'general6.00ls',
        '220 <domain> FTP server (Version wu-2.6.1-0.6x.21)': 'wu2610621',
        '220 <domain> FTP server (Version wu-2.6.1-16.7x.1)': 'wu2611671',
        '220 <domain> FTP server (Version wu-2.6.1-20)': 'wu2616120',
        '220 <domain> FTP server (Version wu-2.6.2(1)': 'wu2616121'
    }

With the signatures at hand, we can look into the payloads for the FTP commands the user sends to the server and the response codes they receive, using regular expressions and pattern matching in Python.

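    import re

    for header, payload in conn.items():
        srcip = socket.inet_ntoa(header[0][0])
        dstip = socket.inet_ntoa(header[1][0])
        sport = header[0][1]
        dport = header[1][1]

        # dpkt yields bytes; latin-1 decodes every byte value without errors
        text = payload.decode('latin-1')

        # Label the flow with the FTP server whose welcome message it starts with
        protocol = "other"
        for signiture, label in signitures.items():
            if text.startswith(signiture):
                protocol = label
                break

        # Each match is one FTP command plus the response code on the following line
        for m in re.finditer(r'\r\n([a-zA-Z]+).*?\r\n(\d+) ', text):
            command = m.group(1).strip()
            code = m.group(2).strip()
            print('{}:{}-{}:{},{},{},{}'.format(
                srcip, sport, dstip, dport, protocol, command, code))

The resulting dataset has one line per FTP command, in the form `source IP:source port-destination IP:destination port,server label,command,response code`.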
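Before any sequence mining, the per-command lines have to be grouped back into one ordered sequence per flow. The following is a minimal sketch of that step, assuming the comma-separated line format printed above; the function name `lines_to_sequences` and the sample lines (addresses, ports, codes) are made up for illustration.

```python
def lines_to_sequences(lines):
    """Group 'flow,label,command,code' lines into one ordered list per flow."""
    sequences = {}
    for line in lines:
        flow, label, command, code = line.strip().split(",")
        # FTP commands are case-insensitive, so normalise them to upper case
        sequences.setdefault((flow, label), []).append((command.upper(), code))
    return sequences


# Hypothetical dataset lines in the format produced by the extraction step
sample_lines = [
    "10.0.0.1:1025-10.0.0.2:21,sunos58,USER,331",
    "10.0.0.1:1025-10.0.0.2:21,sunos58,PASS,230",
    "10.0.0.1:1025-10.0.0.2:21,sunos58,QUIT,221",
    "10.0.0.3:1026-10.0.0.2:21,sunos58,user,331",
    "10.0.0.3:1026-10.0.0.2:21,sunos58,PASS,530",
]

sequences = lines_to_sequences(sample_lines)
for key, transactions in sequences.items():
    print(key, transactions)
```

Keying on both the flow and the server label keeps the label available, so that patterns can later be mined separately per FTP implementation if desired.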

Later, we used a data-mining algorithm to train a classifier on our dataset. The classifier must be general enough to cover most of the dataset while leaving room for valid changes in user behavior. It must also take the order of FTP commands into account.

We first investigated rule induction algorithms and association rules. Association rules are not a suitable hypothesis language for capturing the underlying protocol behavior, as they capture only the dependency between events and ignore their sequential order. Sequential pattern mining algorithms were introduced as a method for discovering sequential patterns. The input data is a set of sequences called data sequences, where each data sequence is a list of transactions and each transaction is a set of items. Typically, a transaction time is associated with each transaction. A sequential pattern likewise consists of a list of sets of items [1]. Although this problem was originally motivated by applications in the retail industry, it seems a reasonably good match for capturing our underlying concept of legitimate network traffic.

In our dataset, each transaction represents an FTP command along with its response code. It is worth mentioning that all the items in an element of a sequential pattern must be present in a single transaction for the data sequence to support the pattern [1]. For example, in figure 1 the PASS command appears after the USER command in all five rules. In the first two rules PASS appears with response code 230, so those rules support only instances that have the same command and response code. In the remaining rules PASS appears with no response code, so they support all instances containing a PASS command regardless of the response code.
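The support condition can be made concrete with a small containment test. This is a sketch under stated assumptions, not the mining algorithm itself: each transaction is encoded as a set holding the command and its response code as separate items (a hypothetical encoding), the function name `supports` is illustrative, and transaction times and any time constraints are ignored.

```python
def supports(sequence, pattern):
    """Return True if the data sequence supports the sequential pattern.

    sequence: list of transactions, each a set of items
    pattern:  list of elements, each a set of items
    Every element must be contained in a single transaction, and the
    matching transactions must appear in the same order as the elements.
    """
    position = 0
    for element in pattern:
        # Advance to the first remaining transaction that contains the element
        while position < len(sequence) and not element <= sequence[position]:
            position += 1
        if position == len(sequence):
            return False
        position += 1  # the next element must match a strictly later transaction
    return True


# Hypothetical flows: a successful login and a rejected password
login = [{"USER", "331"}, {"PASS", "230"}, {"QUIT", "221"}]
failed = [{"USER", "331"}, {"PASS", "530"}]

with_code = [{"USER"}, {"PASS", "230"}]   # PASS tied to response code 230
without_code = [{"USER"}, {"PASS"}]       # PASS with any response code

print(supports(login, with_code))
print(supports(failed, with_code))
print(supports(failed, without_code))
```

The pattern that names the response code is supported only by the successful login, while the pattern without a code is supported by both flows, which mirrors the difference between the two kinds of PASS rules described above.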

These rules can be interpreted as one of the following scenarios:

- The FTP server authorizes the user as an anonymous user (1, 2).

- The user enters an invalid username (3, 4).

- The user enters an invalid username and later also enters an invalid password (5).

All of these rules match our understanding of the possible interactions between users and FTP servers, and anything outside these cases can be considered a suspicious interaction.
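One possible reading of "anything outside these cases" is to flag a flow when it supports none of the mined rules. The sketch below assumes that reading; the two rules are hypothetical stand-ins rather than the five rules from the figure, and the names `supports` and `is_suspicious` are illustrative.

```python
def supports(sequence, pattern):
    """Ordered containment: each element fits inside one later transaction."""
    remaining = iter(sequence)
    # any() consumes the iterator up to the first match, so the next element
    # is only searched for in the transactions that come after it
    return all(any(element <= transaction for transaction in remaining)
               for element in pattern)


def is_suspicious(sequence, rules):
    """Flag a flow that is not covered by any known rule."""
    return not any(supports(sequence, rule) for rule in rules)


# Hypothetical rules, not the ones mined in the experiment
rules = [
    [{"USER", "331"}, {"PASS", "230"}],  # username accepted, login succeeds
    [{"USER", "331"}, {"PASS", "530"}],  # username accepted, login rejected
]

ok_flow = [{"USER", "331"}, {"PASS", "230"}, {"LIST", "226"}]
odd_flow = [{"PASS", "230"}, {"USER", "331"}]  # PASS arrives before USER

print(is_suspicious(ok_flow, rules))
print(is_suspicious(odd_flow, rules))
```

Note that a flow is covered as soon as it contains one rule as a subsequence, so extra commands after a valid login would not be flagged by this check alone.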

One of the intrinsic features of the GSP (Generalized Sequential Patterns) algorithm is that it eliminates redundant appearances of items in rules, so no matter how many times an invalid username is submitted to the FTP server (USER, 331), it appears only once in the GSP rules. This is one of the drawbacks of the approach, and it makes it ineffective against brute-force or application-layer DoS attacks.
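The effect of this drawback can be illustrated with a toy example. The code below is not GSP; it simply removes repeated transactions from a flow to mimic the loss of repetition described above, and it assumes flows encoded as lists of (command, code) tuples. The function name `collapse_repeats` and the sample flows are hypothetical.

```python
from collections import Counter


def collapse_repeats(sequence):
    """Keep only the first occurrence of each transaction, preserving order."""
    seen = set()
    collapsed = []
    for transaction in sequence:
        if transaction not in seen:
            seen.add(transaction)
            collapsed.append(transaction)
    return collapsed


single_failure = [("USER", "331"), ("PASS", "530")]
brute_force = single_failure * 50  # fifty failed login attempts in one flow

# Once repetitions are removed, the two flows can no longer be told apart
print(collapse_repeats(brute_force) == collapse_repeats(single_failure))

# The information that is lost is the repetition count itself
attempts = Counter(brute_force)[("PASS", "530")]
print(attempts)
```

A per-flow count of repeated transactions, kept alongside the mined rules, is one way the missing information could be preserved.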